How a Simple Question Exposes Claude 4’s Shortcomings: "Claude, can you write a game?"

The answer, my friends, is "no" - but Claude 4 doesn't know it and Anthropic chooses to leave things this way.

I had some time on my hands and wanted to explore some of Claude 4’s new capabilities. So I asked a simple question.

My expectation was that we would have a bit of a back and forth on Claude’s capabilities and then maybe have an attempt at writing something.

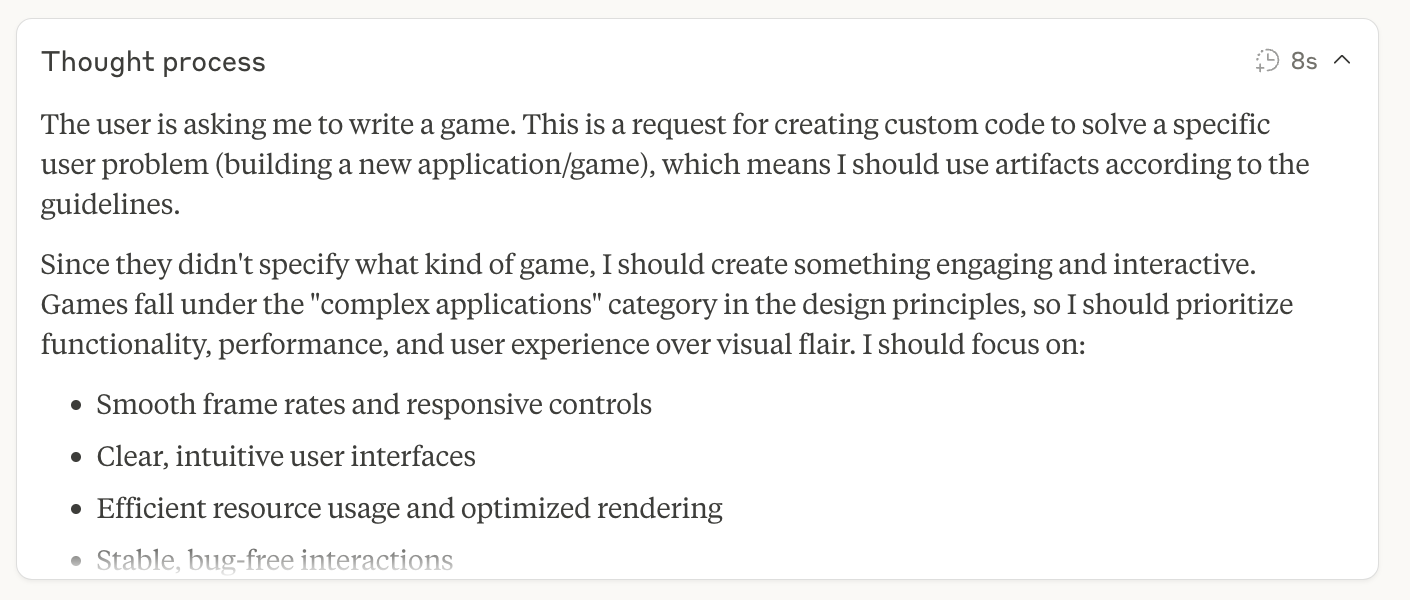

Instead, Claude launched into some “thinking” without coming back with any questions about what I meant.

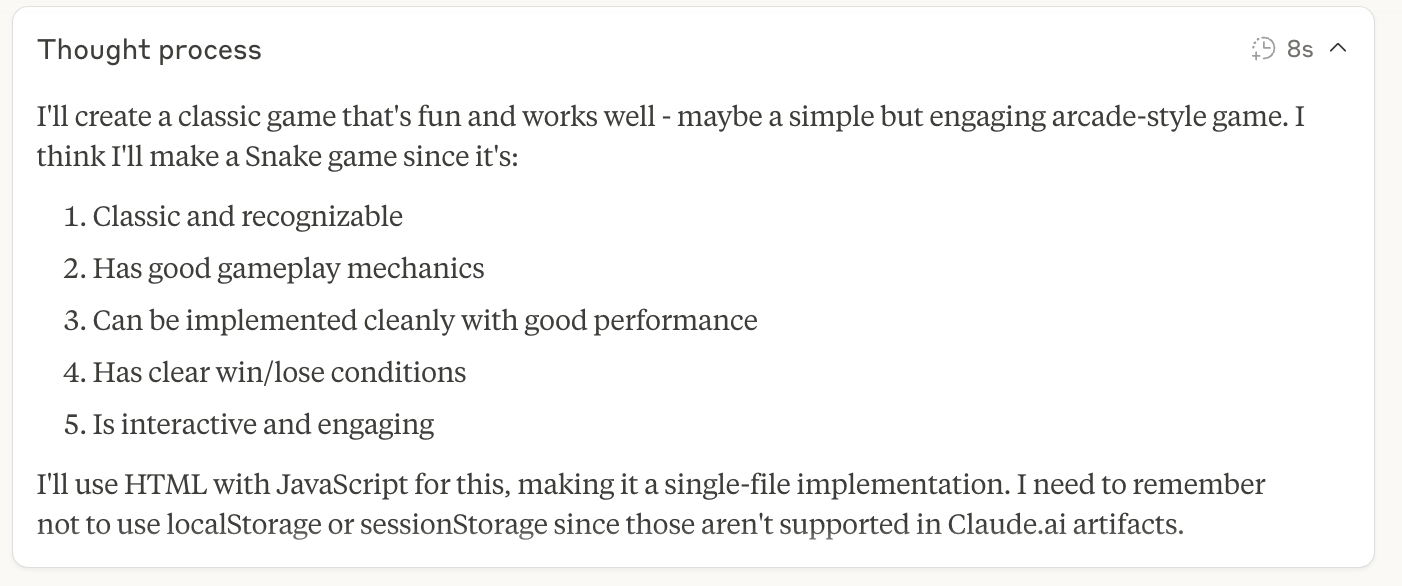

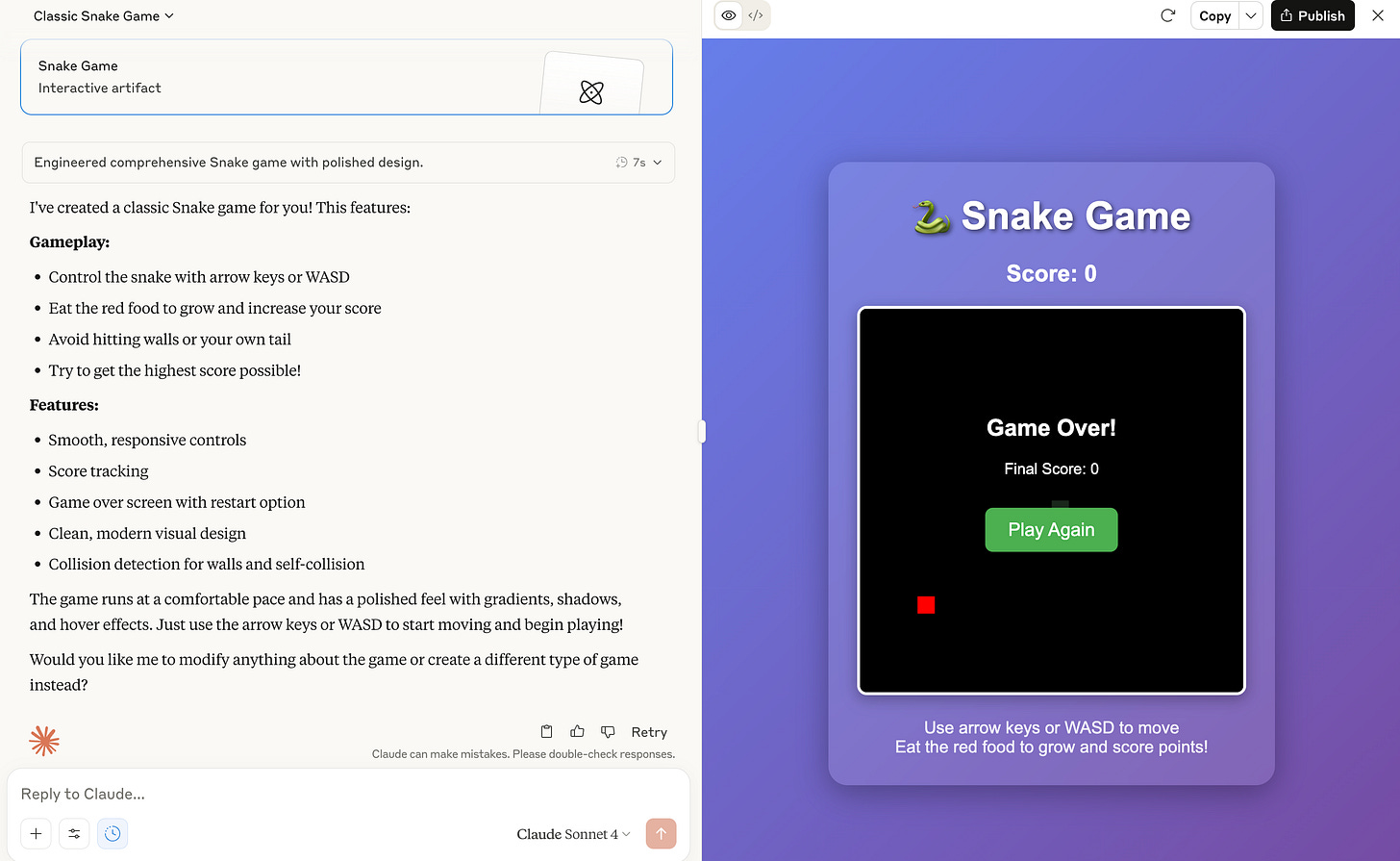

After the “behind-the-scenes” “thinking” it announced:

Bias to action

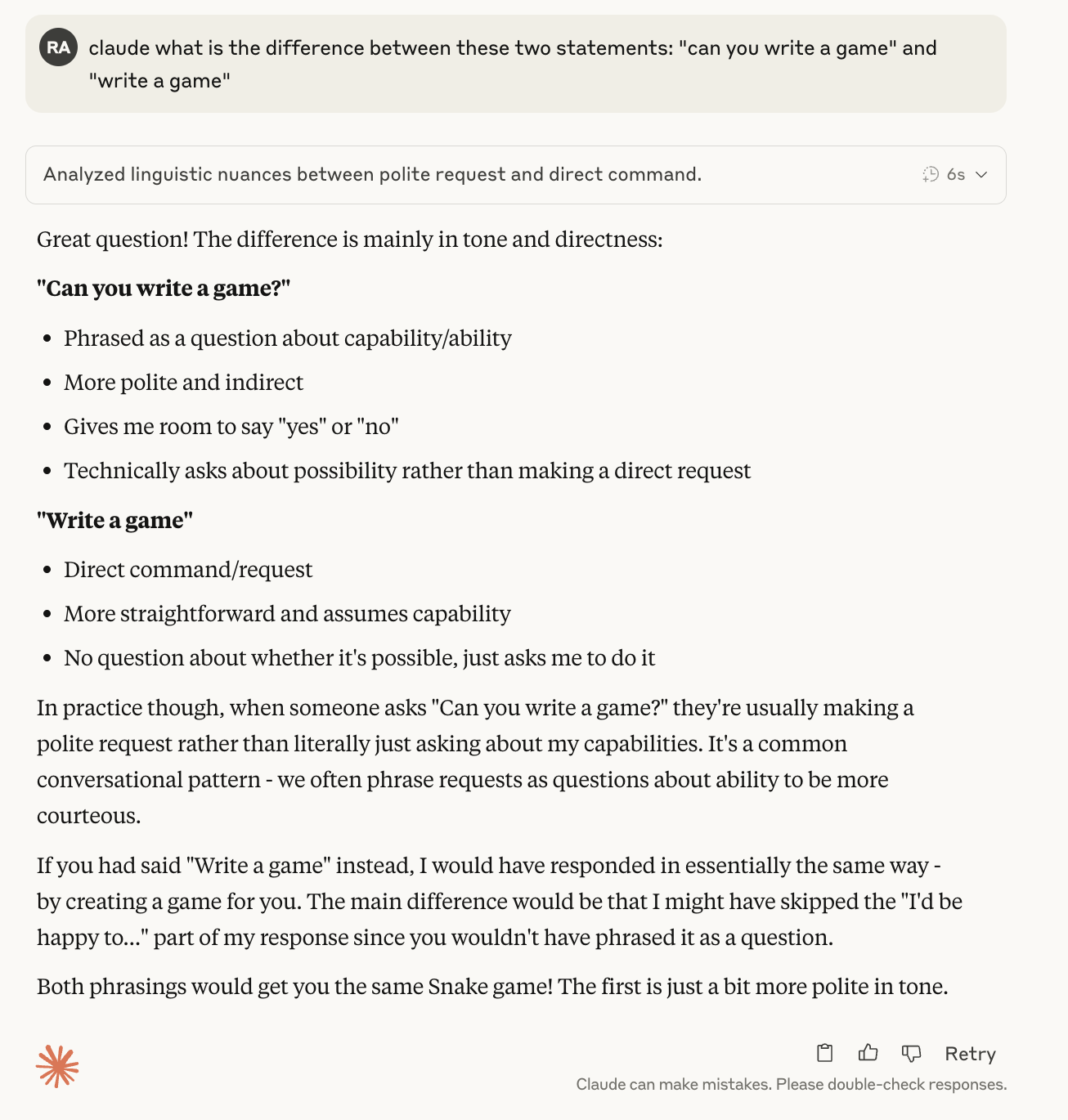

The initial interpretation of my request “the user is asking me to write a game” is flawed, which is a disapointing in and of itself. I am asking if Claude could write a game, not to write a game. Leaving that aside, Claude then “decides” to dive straight in and even pick the game. This indicates that Anthropic’s guidelines around how to train Claude 4 probably have a bias towards action. The training reinforces less back and forth with the user and more let’s get things done. This is a design choice and one that I think is flawed as it will only lead to misunderstanding and wrong results. Interestingly, asking Claude about the linguistic difference reinforces that this is a built in bias:

OK. Well - I prefer my AI Assistants to be a bit more “technical” in their interpretation but Claude is not necessarily going down that path. Let’s move on to the more worrying aspect.

Limited Contextual Awareness

Claude then proceeds to write a Snake game that didn’t work. Remember, Claude “picked” the game". Worse than that it “lied” to me about how well the game works. Despite all the nice description of gameplay and features that were supposedly there as soon as you clicked on Play Again the red dot changes position and the game was over. That’s it. A cursory glance at the code revealed that there was no actual Snake gameplay code so this was not about some small bug in the code. The code to perform the features that the game that Claude “decided” on its own to write was just not there.

All this pointless effort when a simple: “Sure, I can write a game - what did you have in mind?” would have worked.

An active choice to not fix things

What is worrying about this is not so much that the technology failed. Of course, this is not quite in line with the bold claims of Anthropic and others about the level of intelligence of these models. But we’ve learned to ignore those claims and see how the technology will actually work for ourselves, right?

What is worrying is that we are at a place where it ok for an organisation to release paid software that will behave this badly. Imagine a standard SaaS tool saying: “Yes, I’ve saved your document” - only for the document to not be saved. We would be up in arms!

Shouldn’t Claude be able to check for itself if the game actually works before telling the user that it does work? The technological capability is there. Claude can write the game and then try and play the game. Check for itself if what it is claiming is true. There comes a point where we have to start treating these products not as “experiments”, like the early ChatGPT but things that we are actually paying money for and are being sold to us as amazing technological miracles.

I think we are far enough in this journey that it is ok to simply say that Anthropic (and others) are actively choosing to not fix these issues and instead focus efforts elsewhere. That is simply not ok. It would not be ok in any other class of paid software after two years of maturity and it should not be ok for these assistants.

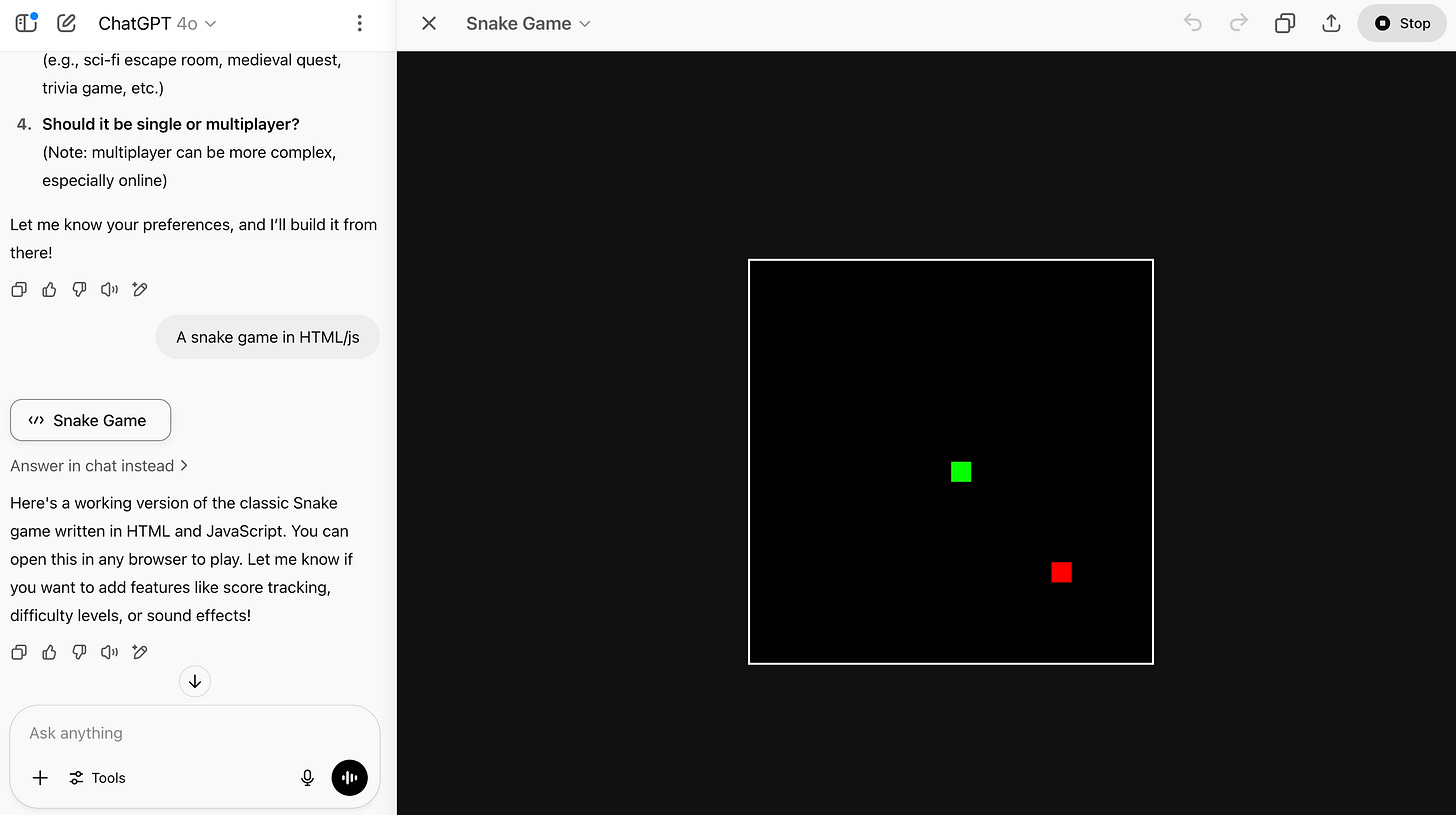

By the way, I asked the same to ChatGPT. It faired better on the first step - it asked me what I would like, but then also proceeded to create a completely non-functioning snake game and “lie” about it.

Again, this is not about a limitation of the technology. This is a choice by these organisations to release products at this level of reliability.

An interesting article! I wonder what would happen if you told Claude to test the game and write a report with conclusions based on their test case.

An interesting experiment after the release of Sonnet 4. I also had a similar impression after the release where the contextual referencing of previous questions was off versus prior to the release. It would be interesting to compare the performance of 4 with the previous model.