👋 CIAO Core Components: Agents - How to Design AI Agents by Starting with the Problem, Not the Technology

We're building sophisticated systems on shaky conceptual foundations - separating problem space from solution space helps bring order.

CIAO👋 is a unifying architectural framework for AI-powered applications that organizes systems into four core components: Conversations (managing dialogue state), Interfaces (handling human interaction), Agents (automated decision-makers), and Orchestration (coordinating everything).

The framework prioritizes "what" applications need to do (like understanding natural language or reasoning about actions) over "how" they're implemented (specific technologies like LLMs), allowing systems to adapt as technologies evolve.

It's designed for the new generation of software that is conversation-centric, handles multiple input/output types, and features increasing levels of automated decision-making.

Check out the introduction and our deep dives into Conversations and Interfaces, or read on here for Agents.

Introduction

The explosion of interest in AI agents has created an unexpected problem. In the rush to build "agentic" systems, we've adopted the terminology without developing the structured thinking to go with it. Every AI-powered application is now described as having "agents," but ask ten developers what makes something an agent and you'll get eleven different answers.

This rapid adoption has led to confusion that slows down development and complicates communication. Teams struggle to articulate what their agents actually do versus what they need them to do. Architecture decisions are made based on hype or FOMO rather than systematic analysis.

We're building sophisticated systems on shaky conceptual foundations.

Agents went from a relatively quiet corner of AI research to the forefront of how we describe LLM-powered applications in less than two years. But in our eagerness to build, we've skipped the crucial step of developing a coherent framework for thinking about them. In this article, we continue fixing that by establishing a structured approach to understanding Agents as a core component of your AI-powered application.

Why is the term agent useful?

Let’s start here. Why do we even need to use this term? We’ve been describing applications (automated or not) without referring to agents for decades. What’s changed?

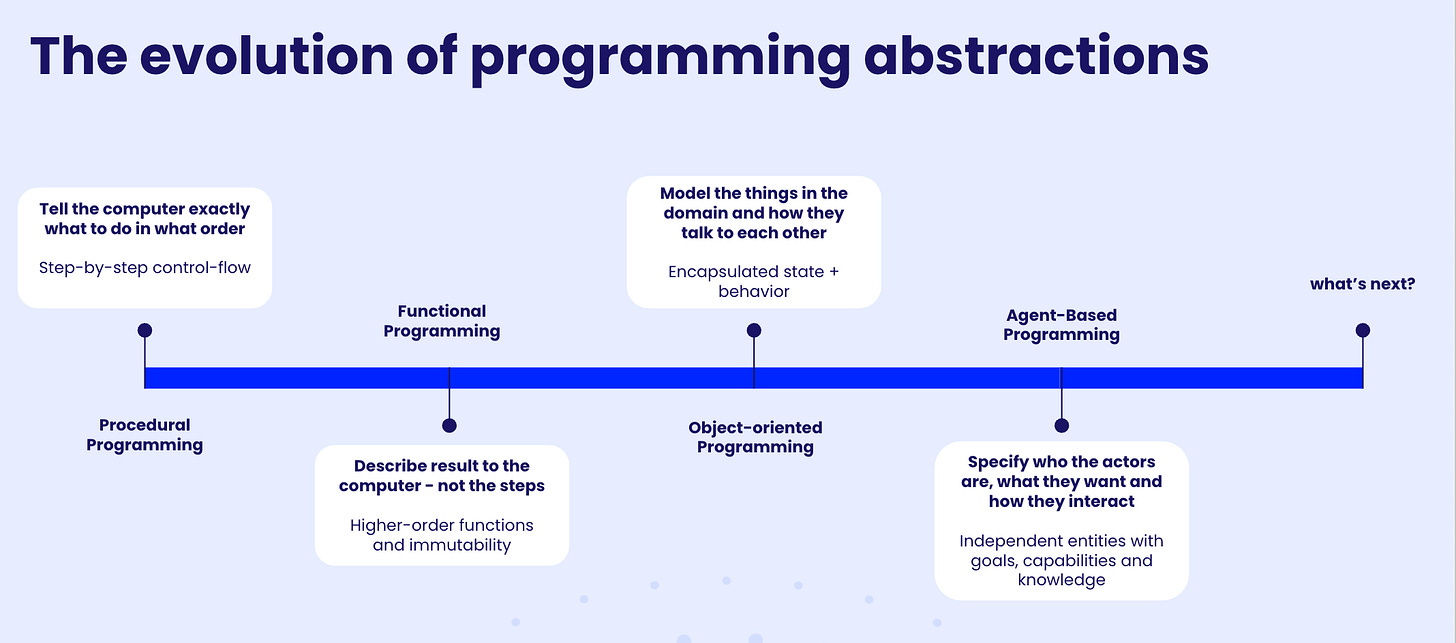

For me, the core utility comes from having a better abstraction to describe your application. Using the right abstraction brings clarity, leads to more robust designs, improves communication across the team and speeds up development. We are clearly building a new type of software, with levels of autonomy that were not possible before, so using a new abstraction is entirely sensible.

Of course, it is not enough to just say we are using the abstraction of agents. We then need to build up a set of concepts and the relationships between those concepts that give us a coherent way of describing and comparing a range of different solutions.

As we mention in the intro, a core tenet of 👋CIAO is that we clearly distinguish the what from the how. In other words, we distinguish the problem space from the solution space. The problem space is defined by what you are trying to solve. The solution space is defined by the options you have for how to solve it.

Let’s get going!

Designing agents starting from the problem space

Separating what must be solved from how we choose to solve it remains one of the simplest, most useful lenses for AI system design. By first cataloguing the agent-independent properties of a task and its environment, we can judge inherent difficulty before deciding whether to deploy a rules engine, a model-based planner, or a large language model. Conversely, listing agent-dependent properties clarifies exactly which architectural knobs we can turn to meet those demands.

Agent-Independent (Problem-Space) Dimensions

These characteristics exist before any particular agent shows up:

Task / Goal Characteristics – What is the scope, the domain, and the baseline complexity?

Example: route-planning for a delivery van versus playing chess.

Goal Verifiability – Can success be measured objectively, or does it rely on subjective judgment?

Check-mate is clear-cut; “best vacation plan” is not.

Environment Properties – How observable is the world? Is it deterministic or stochastic? Static or dynamic? Discrete or continuous?

A chess board is fully observable and static; a stock market is partially observable and stochastic.

Multi-Agent Context – Are we in a multi-agent setting? If so, is it cooperative, competitive, or mixed?

Self-driving fleets cooperate; high-frequency traders compete.

Baseline Temporal Constraints – What deadlines does the external world impose?

A one-second trading tick on an exchange leaves little room for deliberation.

Intrinsic Safety & Alignment Risk – How much harm could failure cause?

Incorrect medical-dose planning carries far more risk than mis-classifying a meme.

Required Steps/Plan Complexity – Does achieving the goal follow a fixed sequence, or require dynamic adaptation?

Assembling furniture follows instructions; navigating rush-hour traffic requires constant replanning.

These dimensions help us understand the fundamental difficulty of a problem before we even consider what kind of agent architecture to deploy. A task that scores high on stochasticity, has low goal verifiability, operates in a competitive multi-agent environment, and carries significant safety risks presents inherent challenges that no agent architecture can simply wish away.
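To make this concrete, here is a minimal sketch of how a problem-space profile could be written down before any agent is chosen. Everything in it is an assumption made for illustration: the `ProblemProfile` and `Level` names, the field names, the one-second cutoff for a "tight" deadline, and the example values for chess and trading are not part of the framework itself.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Level(Enum):
    LOW = 1
    MODERATE = 2
    HIGH = 3


@dataclass(frozen=True)
class ProblemProfile:
    """Agent-independent description of a task (hypothetical field names)."""
    name: str
    goal_verifiability: Level          # how objectively success can be measured
    fully_observable: bool
    deterministic: bool
    static: bool
    multi_agent: str                   # "none", "cooperative", "competitive" or "mixed"
    deadline_seconds: Optional[float]  # None means the world imposes no deadline
    safety_risk: Level
    fixed_plan: bool                   # True if a fixed sequence of steps is enough


def inherent_challenges(p: ProblemProfile) -> list[str]:
    """Return the properties that make the task hard whatever agent we pick."""
    challenges = []
    if p.goal_verifiability is Level.LOW:
        challenges.append("success is subjective")
    if not p.fully_observable:
        challenges.append("partially observable")
    if not p.deterministic:
        challenges.append("stochastic")
    if not p.static:
        challenges.append("dynamic")
    if p.multi_agent in ("competitive", "mixed"):
        challenges.append("competing agents present")
    # Assumed cutoff: a deadline of one second or less counts as tight.
    if p.deadline_seconds is not None and p.deadline_seconds <= 1.0:
        challenges.append("tight deadline")
    if p.safety_risk is Level.HIGH:
        challenges.append("high cost of failure")
    if not p.fixed_plan:
        challenges.append("requires replanning")
    return challenges


# Illustrative values only, loosely based on the examples in the list above.
chess = ProblemProfile("chess", Level.HIGH, True, True, True,
                       "competitive", None, Level.LOW, False)
trading = ProblemProfile("trading", Level.HIGH, False, False, False,
                         "competitive", 1.0, Level.MODERATE, False)

print(chess.name, inherent_challenges(chess))
print(trading.name, inherent_challenges(trading))
```

Note that nothing in this profile mentions models, planners or rules engines. That is the point: the list of challenges is the same whichever agent eventually shows up.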

Agent-Dependent (Solution-Space) Dimensions

Once we understand the problem space, we can examine the architectural choices that determine how well an agent might handle it:

Learning Capabilities – Can the agent improve through experience, or is its behavior fixed?

From simple parameter tuning to sophisticated meta-learning and continual learning without forgetting.

Autonomy Level – How much human oversight does the agent require?

Borrowing from the SAE driving-automation levels (0-5), we can place an agent on a scale that runs from human-controlled with agent assistance to fully autonomous operation.

Planning Architecture – How does the agent decide what to do?

Reactive (stimulus-response), deliberative (model-based planning), or hybrid approaches.

The choice often depends on temporal constraints from the problem space.

Coordination Capabilities – Can the agent work with others?

Communication protocols, negotiation strategies, and team formation abilities.

Critical when the problem space includes multi-agent contexts.

Computational Architecture – What resources does the agent need?

Edge devices with millisecond response but limited compute, or cloud-based with powerful models but network latency.

Memory requirements, energy consumption, and bandwidth constraints.

Perception and Action Capabilities – What can the agent sense and do?

Sensor modalities (text, vision, audio, proprioceptive).

Action space (discrete choices, continuous control, communication acts).

Internal representations (symbolic, neural, hybrid).

Temporal Processing – How does the agent handle time?

Real-time responsiveness versus batch processing.

Planning horizons from immediate reactions to long-term strategic thinking.

Memory architecture for maintaining context over extended interactions.
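The solution-space dimensions can be captured in the same spirit. The sketch below is one possible encoding, not a prescribed schema: the `AgentDesign`, `Autonomy` and `Planning` names are hypothetical, the autonomy labels only loosely mirror the SAE scale, and the rule that a human makes the final call at level 3 and below is an assumption chosen for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum, IntEnum


class Autonomy(IntEnum):
    """Six levels loosely mirroring the 0-5 SAE scale (labels are illustrative)."""
    HUMAN_ONLY = 0
    ASSISTED = 1
    PARTIAL = 2
    CONDITIONAL = 3
    HIGH = 4
    FULL = 5


class Planning(Enum):
    REACTIVE = "reactive"          # stimulus-response
    DELIBERATIVE = "deliberative"  # model-based planning
    HYBRID = "hybrid"


@dataclass
class AgentDesign:
    """Agent-dependent choices (hypothetical field names)."""
    learning: str                  # e.g. "fixed", "preference feedback", "continual"
    autonomy: Autonomy
    planning: Planning
    coordination: bool             # can it communicate or negotiate with other agents?
    compute: str                   # "edge", "cloud" or "edge+cloud"
    perception: list[str] = field(default_factory=list)

    @property
    def human_makes_final_call(self) -> bool:
        # Assumption for this sketch: up to CONDITIONAL, a human signs off.
        return self.autonomy <= Autonomy.CONDITIONAL


assistant = AgentDesign(
    learning="continual",
    autonomy=Autonomy.PARTIAL,
    planning=Planning.HYBRID,
    coordination=True,
    compute="edge+cloud",
    perception=["text", "images"],
)
print(assistant.autonomy.name, assistant.human_makes_final_call)
```

Using an `IntEnum` for autonomy keeps the levels comparable, which is handy when a design rule needs to say "no higher than level 3".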

Bringing it together: Matching solutions to problems

The power of this separation becomes clear when designing systems. Let's examine two contrasting examples:

Example 1: Recipe Recommendation Agent

Problem Space Analysis:

Goal verifiability: Low (taste is subjective, success depends on user satisfaction)

Environment: Mostly observable, deterministic (ingredient availability, dietary restrictions are known)

Safety risk: Low (worst case is an unenjoyable meal, although the risk rises if the agent must handle allergies)

Temporal constraints: Relaxed (users can wait 10-30 seconds for suggestions)

Multi-agent context: None (single user interaction)

Plan complexity: Moderate (matching ingredients, techniques, time constraints)

Solution Space Decisions:

Learning: Basic preference learning from user feedback

Autonomy: Level 4 (agent suggests, user can modify)

Planning: Simple deliberative (filter → rank → present options)

Computation: Can run entirely on edge devices

Perception: Text input, possibly image recognition for ingredients

Coordination: Not needed for basic version

This is a forgiving problem space where we can experiment with higher autonomy and simpler architectures. The low stakes allow for learning through trial and error.
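The "filter → rank → present" planning step is simple enough to sketch in a few lines. This is a toy version under stated assumptions: the `Recipe` type, the `recommend` function, the scoring weights and the sample data are all made up for illustration, and a real recommender would learn its ranking from user feedback rather than use a fixed formula.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Recipe:
    name: str
    ingredients: frozenset
    minutes: int
    tags: frozenset = frozenset()


def recommend(recipes, pantry, excluded, max_minutes, liked_tags, top_n=3):
    """Filter on hard constraints, rank by a simple score, present the top names."""
    # Filter: drop anything too slow or containing an excluded ingredient.
    candidates = [
        r for r in recipes
        if r.minutes <= max_minutes and not (r.ingredients & excluded)
    ]

    # Rank: pantry coverage plus a small bonus per liked tag (assumed weights).
    def score(r):
        coverage = len(r.ingredients & pantry) / max(len(r.ingredients), 1)
        return coverage + 0.5 * len(r.tags & liked_tags)

    ranked = sorted(candidates, key=score, reverse=True)

    # Present: return only the names of the best few options.
    return [r.name for r in ranked[:top_n]]


recipes = [
    Recipe("peanut noodles", frozenset({"noodles", "peanut", "soy sauce"}), 20,
           frozenset({"asian"})),
    Recipe("tomato pasta", frozenset({"pasta", "tomato", "garlic"}), 25,
           frozenset({"italian"})),
    Recipe("slow roast", frozenset({"beef", "onion"}), 180, frozenset({"comfort"})),
    Recipe("garlic omelette", frozenset({"egg", "garlic"}), 10, frozenset({"quick"})),
]

suggestions = recommend(
    recipes,
    pantry={"pasta", "tomato", "garlic", "egg"},
    excluded={"peanut"},          # e.g. an allergy, treated as a hard constraint
    max_minutes=30,
    liked_tags={"italian"},
)
print(suggestions)  # ['tomato pasta', 'garlic omelette']
```

Allergies sit in the filter step rather than the ranking step on purpose: the one safety-relevant part of this problem is handled as a hard constraint, while everything subjective is left to the score.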

Example 2: Medical Diagnosis Agent

Problem Space Analysis:

Goal verifiability: Mixed (some conditions have clear tests, others require judgment)

Environment: Partially observable, stochastic (hidden symptoms, disease progression)

Safety risk: High (misdiagnosis can be fatal)

Temporal constraints: Moderate overall (tight in emergency care, relaxed in routine care)

Multi-agent context: Cooperative (must work with healthcare team)

Plan complexity: High (differential diagnosis, test ordering, treatment planning)

Solution Space Decisions:

Learning: Continual learning to incorporate new medical knowledge

Autonomy: Level 2-3 (agent assists, human decides)

Planning: Hybrid (quick triage reactions + deliberative diagnosis)

Computation: Cloud-based for complex reasoning, edge for emergency triage

Perception: Multi-modal (text, images, lab results, vital signs)

Coordination: Must integrate with electronic health records, other clinical systems

The contrast is striking. The recipe recommender can be playful and experimental, while the medical agent demands conservative design choices, extensive validation, and human oversight. This structured approach prevents both over-engineering (building unnecessary capabilities) and under-engineering (deploying inadequate agents). It makes trade-offs explicit: accepting lower autonomy to manage safety risks, or investing in expensive computational resources to meet temporal constraints.
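One way to make these trade-offs explicit is to write them down as checks that compare a design against the problem it targets. The sketch below is illustrative only: the `Problem`, `Design` and `review` names are hypothetical, the rules and the autonomy ceiling of 3 for high-risk problems are assumptions inspired by the two examples above, and the numbers are placeholders rather than recommendations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Problem:
    safety_risk: str            # "low", "moderate" or "high"
    multi_agent: bool
    deadline_seconds: float     # time the outside world allows per decision


@dataclass(frozen=True)
class Design:
    autonomy: int               # 0-5
    coordination: bool
    typical_latency_seconds: float


def review(problem: Problem, design: Design) -> list[str]:
    """Flag places where the design does too little or too much for the problem."""
    findings = []
    # Assumed rule: high-risk problems keep a human in charge (level 3 or below).
    if problem.safety_risk == "high" and design.autonomy > 3:
        findings.append("under-engineered: autonomy too high for the safety risk")
    if problem.multi_agent and not design.coordination:
        findings.append("under-engineered: no coordination in a multi-agent setting")
    if design.typical_latency_seconds > problem.deadline_seconds:
        findings.append("under-engineered: too slow for the deadline")
    if not problem.multi_agent and design.coordination:
        findings.append("over-engineered: coordination with no other agents around")
    return findings


# Placeholder values standing in for the two examples above.
recipe_problem = Problem(safety_risk="low", multi_agent=False, deadline_seconds=30.0)
recipe_design = Design(autonomy=4, coordination=False, typical_latency_seconds=5.0)

medical_problem = Problem(safety_risk="high", multi_agent=True, deadline_seconds=60.0)
risky_design = Design(autonomy=5, coordination=False, typical_latency_seconds=10.0)

print(review(recipe_problem, recipe_design))   # no findings
for finding in review(medical_problem, risky_design):
    print(finding)
```

The rules themselves matter less than where they live. Because they reference only problem-space and solution-space fields, they can be reviewed by product and engineering together and survive a change of underlying technology.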

Looking ahead: The evolving landscape of agents

As we build more sophisticated AI-powered applications, the agent abstraction becomes increasingly valuable. Its value lies not in the buzzword itself but in the structured thinking it enables. By clearly separating what must be solved from how we choose to solve it, we can:

Communicate clearly across teams – product managers can specify problem-space requirements while engineers explore solution-space options

Evaluate systematically – distinguishing between difficult problems and poorly-designed agents

Evolve gracefully – as new technologies emerge (better models, new architectures), we can swap solution approaches while keeping problem definitions stable

Design responsibly – explicitly considering safety and alignment risks before they become afterthoughts

The 👋CIAO framework's emphasis on Agents as one of four core components (alongside Conversations, Interfaces, and Orchestration) reflects a fundamental shift in how we build software. No longer are we just coding procedures or training models – we're designing entities with increasing autonomy that must navigate complex, uncertain environments while maintaining alignment with human values and goals.

This shift requires new abstractions, new frameworks, and new ways of thinking. The agent-independent versus agent-dependent distinction is just one tool in this evolving toolkit, but it's a powerful one. It forces us to be honest about what we're asking our systems to do, and thoughtful about how we equip them to do it.

As you design your next AI-powered application, start with the problem space. Understand the inherent challenges before reaching for solutions. Then, and only then, explore the solution space with clear eyes about what capabilities your agents truly need. This discipline – separating the what from the how – will give you a head start in building the right sort of solution for the problem at hand.